I have a Razer keyboard with a separate LED for each key, and was curious whether I could address them from Python. As it turns out, there is a Linux driver and a Python library that make it possible. I do not have a specific use case in mind; it could be useful for displaying notifications or a progress bar, or perhaps something else entirely.

The setup is relatively straightforward: I just had to install the driver (https://openrazer.github.io/#download). The project is also kind enough to provide some examples, for instance https://github.com/openrazer/openrazer/blob/master/examples/custom_zones.py
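Both scripts in this post pick the keyboard by its exact name, which replaces the `{{your keyboard}}` placeholder. Below is a minimal sketch for finding that name and doing the lookup safely. It assumes the device objects exposed by openrazer's `DeviceManager().devices`, with the `name`, `serial` and `fx.advanced` attributes the scripts already use, and `fx.advanced` being falsy for devices without per-key lighting. The helper names and the stand-in devices are hypothetical, chosen so the functions can be tried without hardware:

```python
from types import SimpleNamespace


def list_devices(devices):
    """Return one (name, serial, supports_per_key_lighting) tuple per device."""
    return [(d.name, d.serial, bool(d.fx.advanced)) for d in devices]


def find_keyboard(devices, name):
    """Return the first device called `name` with per-key lighting, or None."""
    for d in devices:
        if d.fx.advanced and d.name == name:
            return d
    return None


# Hypothetical stand-ins with the same attributes as openrazer devices
demo_devices = [
    SimpleNamespace(name="Keyboard A", serial="S1", fx=SimpleNamespace(advanced=object())),
    SimpleNamespace(name="Mouse B", serial="S2", fx=SimpleNamespace(advanced=None)),
]

for name, serial, per_key in list_devices(demo_devices):
    print(f"{name} ({serial}) per-key lighting: {per_key}")
```

With the driver installed, `list_devices(DeviceManager().devices)` (with `DeviceManager` imported from `openrazer.client`) shows the names the daemon reports, and `find_keyboard` returns `None` when nothing matches, so the caller can exit with a clear message.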

I changed the custom_zones.py example a little to display the current outside temperature, one key per degree Celsius, using the LEDs on the function row:

```python
import sys
import time

from openrazer.client import DeviceManager
from pyowm.owm import OWM

# Assumption: how many keys of row 0 (starting at column 1) to use; adjust to your layout
MAX_KEYS = 12

# Create a DeviceManager. This is used to get specific devices
device_manager = DeviceManager()
print("Found {} Razer devices".format(len(device_manager.devices)))

# Pick the keyboard by name; it must support per-key ("advanced") effects
keyboard = None
for device in device_manager.devices:
    if device.fx.advanced and device.name == "{{your keyboard}}":
        keyboard = device
        print("Selected device: " + device.name + " (" + device.serial + ")")
        break

if keyboard is None:
    sys.exit("No suitable device found.")

# Disable daemon effect syncing.
# Without this, the daemon will try to set the lighting effect to every device.
device_manager.sync_effects = False

# Replace with your OpenWeatherMap API key
api_key = "{{redacted}}"
owm = OWM(api_key)
mgr = owm.weather_manager()

# Get weather for a specific location
city = "Wroclaw"

while True:
    # Fetch a fresh observation each time; fetching once before the loop never updates
    weather = mgr.weather_at_place(city).weather
    temperature = weather.temperature('celsius')['temp']

    # One key per degree: below zero lights nothing, above MAX_KEYS is capped
    buttons_to_light = max(0, min(round(temperature), MAX_KEYS))

    # Light the keys in blue and switch off any left over from the last reading
    for i in range(MAX_KEYS):
        color = (0, 0, 255) if i < buttons_to_light else (0, 0, 0)
        keyboard.fx.advanced.matrix[0, 1 + i] = color
    keyboard.fx.advanced.draw()

    time.sleep(60)
```

Another example displays the server load, fetched first from a Prometheus database:

import time

from openrazer.client import DeviceManager

import requests

# Create a DeviceManager. This is used to get specific devices

device_manager = DeviceManager()

print("Found {} Razer devices".format(len(device_manager.devices)))

devices = device_manager.devices

for device in devices:

if device.fx.advanced and device.name == "{{your keyboard}}":

keyboard = device

print("Selected device: " + device.name + " (" + device.serial + ")")

break

if not keyboard:

print("No suitable device found.")

device_manager.sync_effects = False

# Define the Prometheus server URL and query

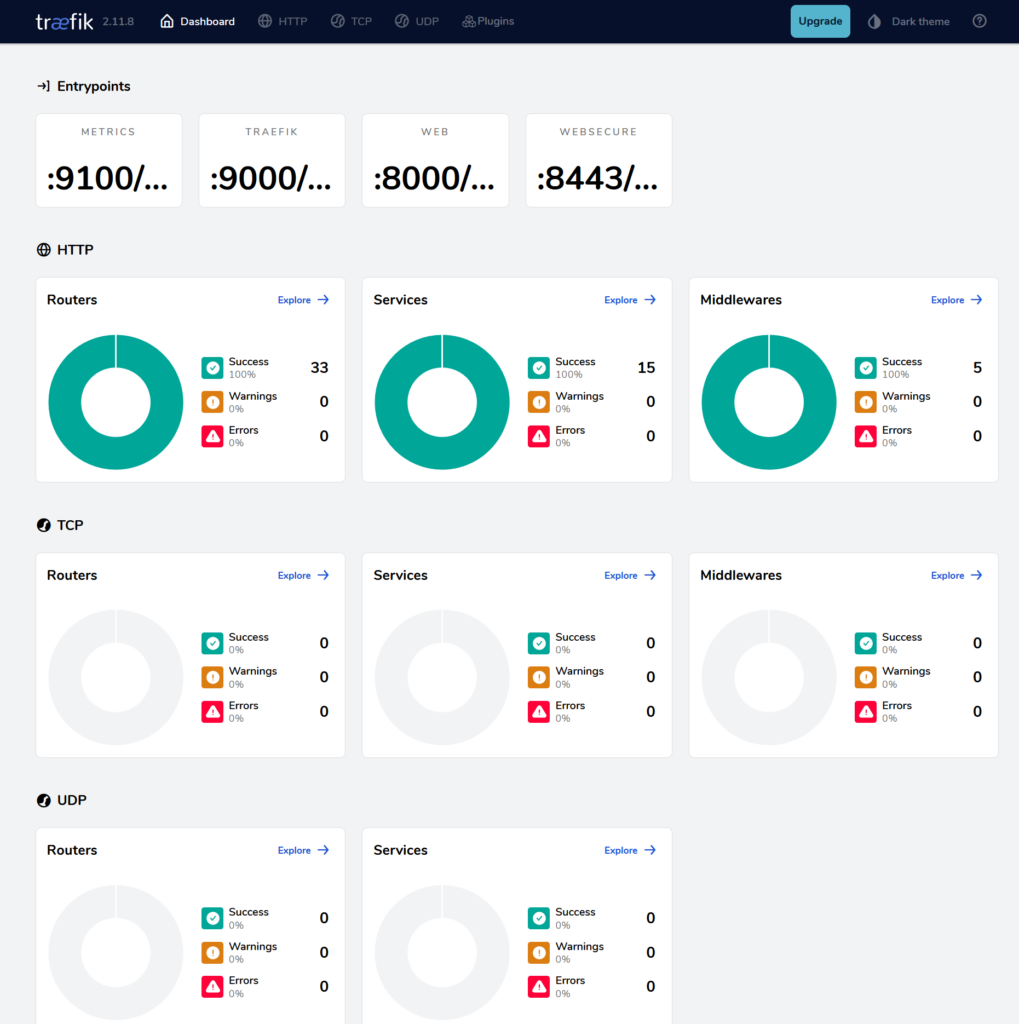

prometheus_url = "http://192.168.1.43:9999/api/v1/query"

query = "node_load5{instance='fedora-1.home'}"

while True:

response = requests.get(prometheus_url, params={"query": query})

data = response.json()

result = data["data"]["result"]

timestamp, value = result[0]["value"]

load = round(float(value))

for i in range(0, load):

keyboard.fx.advanced.matrix[0,1+i]=(255,0,0)

keyboard.fx.advanced.draw()

time.sleep(60)
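Both scripts repeat the same drawing step: clamp a number, light that many keys in row 0 starting at column 1, and turn the rest off. Below is a minimal sketch of factoring that into one function, assuming only the `matrix[row, col] = (r, g, b)` assignment the scripts already use. `draw_bar` and its `max_keys=12` default are hypothetical; match `max_keys` to the number of keys your keyboard actually has in that row. Because the function only needs item assignment, a plain dict can stand in for the LED matrix:

```python
def draw_bar(matrix, value, color, max_keys=12, row=0, start_col=1):
    """Light round(value) keys in `row` with `color` and switch the rest off.

    `matrix` is anything supporting `matrix[row, col] = (r, g, b)`, such as
    openrazer's keyboard.fx.advanced.matrix. Returns the number of lit keys.
    """
    lit = max(0, min(round(value), max_keys))  # negative -> 0, too large -> max_keys
    off = (0, 0, 0)
    for i in range(max_keys):
        matrix[row, start_col + i] = color if i < lit else off
    return lit


# A dict stands in for the keyboard's LED matrix here
frame = {}
draw_bar(frame, 3.4, (0, 0, 255), max_keys=5)
print(sorted(frame.items()))
```

In the temperature script, the drawing part of the loop would then become `draw_bar(keyboard.fx.advanced.matrix, weather.temperature('celsius')['temp'], (0, 0, 255))` followed by `keyboard.fx.advanced.draw()`.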
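Taking `result[0]` is only safe when the query matched at least one series, and the reply's `"status"` field says whether the query succeeded at all. A minimal sketch of a defensive parser for the JSON that Prometheus' `/api/v1/query` endpoint returns for an instant query, where each sample's `value` is a `[unix_timestamp, "value as a string"]` pair. The function name `first_sample_value` is hypothetical, and the reply below is illustrative, not real data from the server above:

```python
def first_sample_value(payload):
    """Return the first sample's value from a Prometheus instant-query reply.

    `payload` is the decoded JSON body of GET /api/v1/query. Returns None when
    the query failed or matched no series, instead of raising IndexError.
    """
    if payload.get("status") != "success":
        return None
    result = payload.get("data", {}).get("result", [])
    if not result:
        return None
    _timestamp, value = result[0]["value"]  # sample = [unix time, value as string]
    return float(value)


# Illustrative reply shape for a single matching series
example_reply = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"instance": "fedora-1.home"}, "value": [1700000000.0, "1.37"]}
        ],
    },
}

print(first_sample_value(example_reply))
```

In the load script, `first_sample_value(response.json())` could replace the manual indexing, with `None` treated as "nothing to show".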